- Kavin Sharma

- August 13, 2025

January 27, 2026

Cloud Modernization

When I started working on connectors in a recent project, the job sounded simple:

“Add integrations so users can sync data.”

The first few connectors were exactly that. We used Meltano (Singer taps) to get moving fast, and it worked great in the beginning. You pick a tap, configure credentials, run the pipeline, and you’ve got data.

Then we added more connectors. And then more users started running them on schedules.

What started as simple data connectors quickly became a challenge in building scalable connector libraries that could survive real-world production workloads.

If your integrations involve older platforms, this ties closely to integrating legacy systems with modern digital services; the same scaling pressure shows up there too.

That’s when the real work began.

If you’re in the middle of modernizing ingestion end-to-end, our migration story in Rethinking ETL: Why We Migrated Our Legacy Pipelines to Apache Spark adds the broader pipeline context.

Meltano and Singer taps are excellent for bootstrapping ETL connectors, but scaling them exposed orchestration and state management gaps.

Meltano was a strong starting point for building a connector library quickly. It helped us integrate multiple sources without writing everything from scratch, and it gave us a common pattern for extraction.

But scaling exposed a few repeating issues - not “Meltano is bad” issues, just the kind of problems that show up when your system moves from “a few manual runs” to “many automated syncs”.

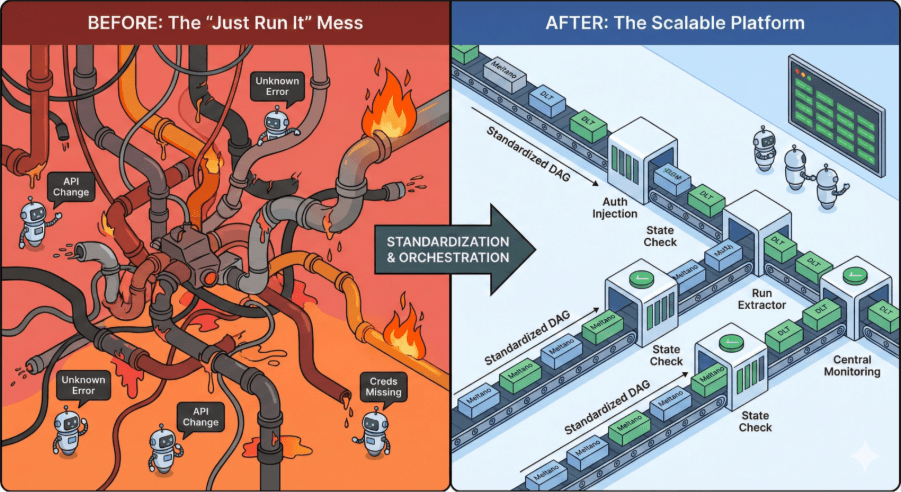

At this point, it wasn’t a connector problem.

It was a connector system problem.

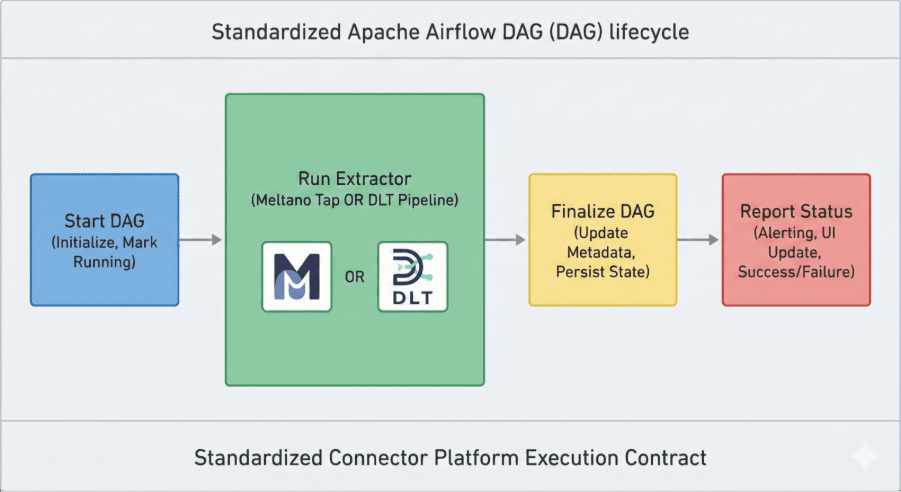

Here’s what I learned fast: connectors don’t scale when orchestration is inconsistent. In practice, connector orchestration with Airflow DAGs mattered more than the connector implementation itself.

A sync job can’t just “run a tap.” It must behave predictably so the platform can:

So, we standardized the Airflow execution lifecycle into a simple pattern:

start → run extractor → finalize → final status

This is the same reason ETL control logic matters: retries, idempotency, and consistent failure handling are what keep production pipelines stable.

For Meltano, that meant:

This sounds like a small thing, but it solved a huge scaling issue:

Even when connectors fail in unique ways, the system handles failure in a consistent way.

That’s what “scale” actually is.
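
To make the lifecycle concrete, here is a minimal sketch of the start → run extractor → finalize → final status pattern in plain Python rather than real Airflow operators. The `SyncResult` class and `run_sync` function are hypothetical names for illustration, not the project's actual code. The point it shows is that finalize always runs and every failure, whatever its cause, ends in the same status shape.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class SyncResult:
    status: str                      # "running", then "success" or "failed"
    error: Optional[str] = None
    steps: List[str] = field(default_factory=list)


def run_sync(extractor: Callable[[], None]) -> SyncResult:
    """Run one sync through start -> run extractor -> finalize -> final status."""
    result = SyncResult(status="running")
    result.steps.append("start")
    try:
        result.steps.append("run_extractor")
        extractor()
    except Exception as exc:
        # Any connector-specific failure is reduced to one consistent shape.
        result.error = f"{type(exc).__name__}: {exc}"
    finally:
        # Finalize always runs, whether the extractor succeeded or not.
        result.steps.append("finalize")
    result.status = "failed" if result.error else "success"
    result.steps.append("final_status")
    return result


def flaky_tap() -> None:
    # Hypothetical extractor that fails in its own particular way.
    raise TimeoutError("source API timed out")


ok = run_sync(lambda: None)
bad = run_sync(flaky_tap)
print(ok.status, ok.steps)
print(bad.status, bad.error)
```

Both runs pass through the same four steps; only the final status and the recorded error differ, which is what lets the platform treat every connector the same way.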

As we went deeper, there were cases where “use an existing Meltano tap” became less ideal:

That’s where DLT came into the picture.

Not as a replacement for Meltano - but as a second execution path for connectors where we needed more flexibility.

Writing a DLT pipeline is not that hard.

The hard part is this:

If DLT runs differently than Meltano, you now have two systems, two failure models, and two operational playbooks.

That’s how platforms become messy.

So the real goal became:

That meant DLT jobs had to:

We literally reshaped the DLT DAG to match the Meltano DAG structure.

So instead of “a custom DLT task”, it became:

start_dag → run_dlt → finalize_dag → final_status

And this mattered more than any implementation detail, because it kept the platform coherent.
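
One way to picture the shared structure is a single task builder that takes the engine as a parameter. The sketch below is plain Python, not Airflow code. The `run_meltano` and `run_dlt` functions are hypothetical stand-ins, and the `run_meltano` task name is an assumption that mirrors the `run_dlt` name above.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical stand-ins: a real runner would invoke Meltano or a DLT pipeline.
def run_meltano(connector: str) -> None:
    print(f"[meltano] syncing {connector}")


def run_dlt(connector: str) -> None:
    print(f"[dlt] syncing {connector}")


RUNNERS: Dict[str, Callable[[str], None]] = {
    "meltano": run_meltano,
    "dlt": run_dlt,
}

Task = Tuple[str, Callable[[], None]]


def build_tasks(engine: str, connector: str) -> List[Task]:
    """Return the ordered (task_id, callable) pairs for one sync."""
    runner = RUNNERS[engine]  # raises KeyError for an unknown engine
    return [
        ("start_dag", lambda: print(f"start {connector}")),
        (f"run_{engine}", lambda: runner(connector)),
        ("finalize_dag", lambda: print(f"finalize {connector}")),
        ("final_status", lambda: print(f"status recorded for {connector}")),
    ]


for engine in ("meltano", "dlt"):
    for task_id, task in build_tasks(engine, "orders_api"):
        task()
```

Only the second task differs between engines, so anything built around the other three (alerting, status reporting, cleanup) does not need to know which engine ran.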

Once we had Meltano and DLT both running under the same orchestration contract, the next scaling problems were mostly predictable - and solvable by standardization.

Connectors fail constantly when secrets aren’t managed cleanly across environments. This is one of the most common modernization pitfalls we see (and it compounds technical debt fast), which we cover in Top 10 Costly Mistakes to Avoid During Cloud Modernization.

So, part of making this scale was ensuring credentials could be:

This is unglamorous work, but without it, “scaling connectors” is a lie.
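
As an illustration of what "managed cleanly" can mean, the sketch below resolves a connector's credentials per environment and fails before the sync starts if any are missing. The `<ENV>_<CONNECTOR>_<KEY>` naming convention, `load_credentials`, and `MissingSecretError` are all assumptions made for this example; a real setup would more likely read from a secrets manager than from plain environment variables.

```python
import os
from typing import Dict, Iterable, List, Mapping


class MissingSecretError(RuntimeError):
    """Raised before a sync starts when required secrets are absent."""


def load_credentials(
    connector: str,
    environment: str,
    required_keys: Iterable[str],
    source: Mapping[str, str] = os.environ,
) -> Dict[str, str]:
    creds: Dict[str, str] = {}
    missing: List[str] = []
    for key in required_keys:
        # Hypothetical naming convention: <ENV>_<CONNECTOR>_<KEY>
        var = f"{environment}_{connector}_{key}".upper()
        value = source.get(var)
        if value:
            creds[key] = value
        else:
            missing.append(var)
    if missing:
        raise MissingSecretError(
            f"{connector} ({environment}) is missing: {', '.join(missing)}"
        )
    return creds


# A fake environment keeps the example self-contained.
fake_env = {"STAGING_ORDERS_API_KEY": "abc123"}
creds = load_credentials("orders", "staging", ["api_key"], source=fake_env)
print(creds)

try:
    load_credentials("orders", "prod", ["api_key"], source=fake_env)
except MissingSecretError as exc:
    print(exc)
```

Failing at load time turns a confusing mid-sync authentication error into an explicit message that names the missing variable.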

Treating connector state management as a platform concern was critical for reliable incremental data loads.

Meltano and DLT both manage state differently.

To scale, we treated state as a platform concern:
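
A rough sketch of the idea, under the assumption that "platform concern" means the platform, not the engine, decides when a cursor is saved. `StateStore` and `incremental_sync` are hypothetical names, and the in-memory dictionary stands in for whatever durable storage a real platform would use. The cursor is committed only after the extractor returns successfully.

```python
from typing import Callable, Dict, Optional


class StateStore:
    """In-memory stand-in; a real store would be a database or object storage."""

    def __init__(self) -> None:
        self._cursors: Dict[str, str] = {}

    def load(self, connector_id: str) -> Optional[str]:
        return self._cursors.get(connector_id)

    def commit(self, connector_id: str, cursor: str) -> None:
        self._cursors[connector_id] = cursor


def incremental_sync(
    store: StateStore,
    connector_id: str,
    extract: Callable[[Optional[str]], str],
) -> str:
    """extract receives the last cursor and returns the new one."""
    last_cursor = store.load(connector_id)
    new_cursor = extract(last_cursor)  # an exception here skips the commit
    store.commit(connector_id, new_cursor)
    return new_cursor


def broken_extract(cursor: Optional[str]) -> str:
    raise ConnectionError("source unavailable")


store = StateStore()
incremental_sync(store, "orders", lambda cursor: "2025-01-02")

try:
    incremental_sync(store, "orders", broken_extract)
except ConnectionError:
    pass

print(store.load("orders"))  # prints 2025-01-02
```

Because the failed run never reaches `commit`, the next scheduled sync resumes from the last good cursor regardless of which engine produced it.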

Schema drift handling became non-negotiable for long-running data connectors tied to external APIs.

External APIs change. The system must assume:

Scaling meant adding guardrails so schema issues show up early and don’t silently corrupt the pipeline.
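
One simple form such a guardrail can take is a check that compares each incoming record against the fields the pipeline expects and reports differences before anything is loaded. The `detect_drift` function and the example schema below are hypothetical, a sketch of the idea and not the checks the platform actually runs.

```python
from typing import Any, Dict, List, Mapping


def detect_drift(
    expected: Mapping[str, type], record: Mapping[str, Any]
) -> Dict[str, List[str]]:
    """Report fields that were added, went missing, or changed type."""
    added = sorted(set(record) - set(expected))
    missing = sorted(set(expected) - set(record))
    retyped = sorted(
        key
        for key in set(expected) & set(record)
        # None is treated as "no value" here, not as a type change.
        if record[key] is not None and not isinstance(record[key], expected[key])
    )
    return {"added": added, "missing": missing, "retyped": retyped}


# Hypothetical expected schema and a record from an API that has changed.
expected_schema = {"id": int, "email": str, "created_at": str}
incoming = {"id": "42", "email": "a@example.com", "plan": "pro"}

report = detect_drift(expected_schema, incoming)
print(report)
# prints {'added': ['plan'], 'missing': ['created_at'], 'retyped': ['id']}
```

What happens next is a policy decision: a new field might only raise a warning, while a missing or retyped field might fail the sync at the finalize step.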

Once Meltano and DLT ran under the same DAG lifecycle, debugging became far more systematic:

That’s the difference between “hero debugging” and “platform debugging”.

We didn’t end up choosing Meltano or DLT.

We ended up with a connector platform where:

The scaling win wasn’t throughput.

It was confidence:

Adding connectors stopped feeling risky.

Meltano helped us move fast.

Airflow DAG standards helped us stay sane.

DLT helped us extend the platform without turning it into a forked-connector graveyard.

Scaling connector libraries isn’t about writing more connectors.

It’s about making sure every connector - no matter how it’s built - behaves predictably when it runs at 2 a.m. on a schedule.

That’s what makes it real.