- Srinivasa Rao Pampana

- November 20, 2024

July 21, 2025

Data engineering

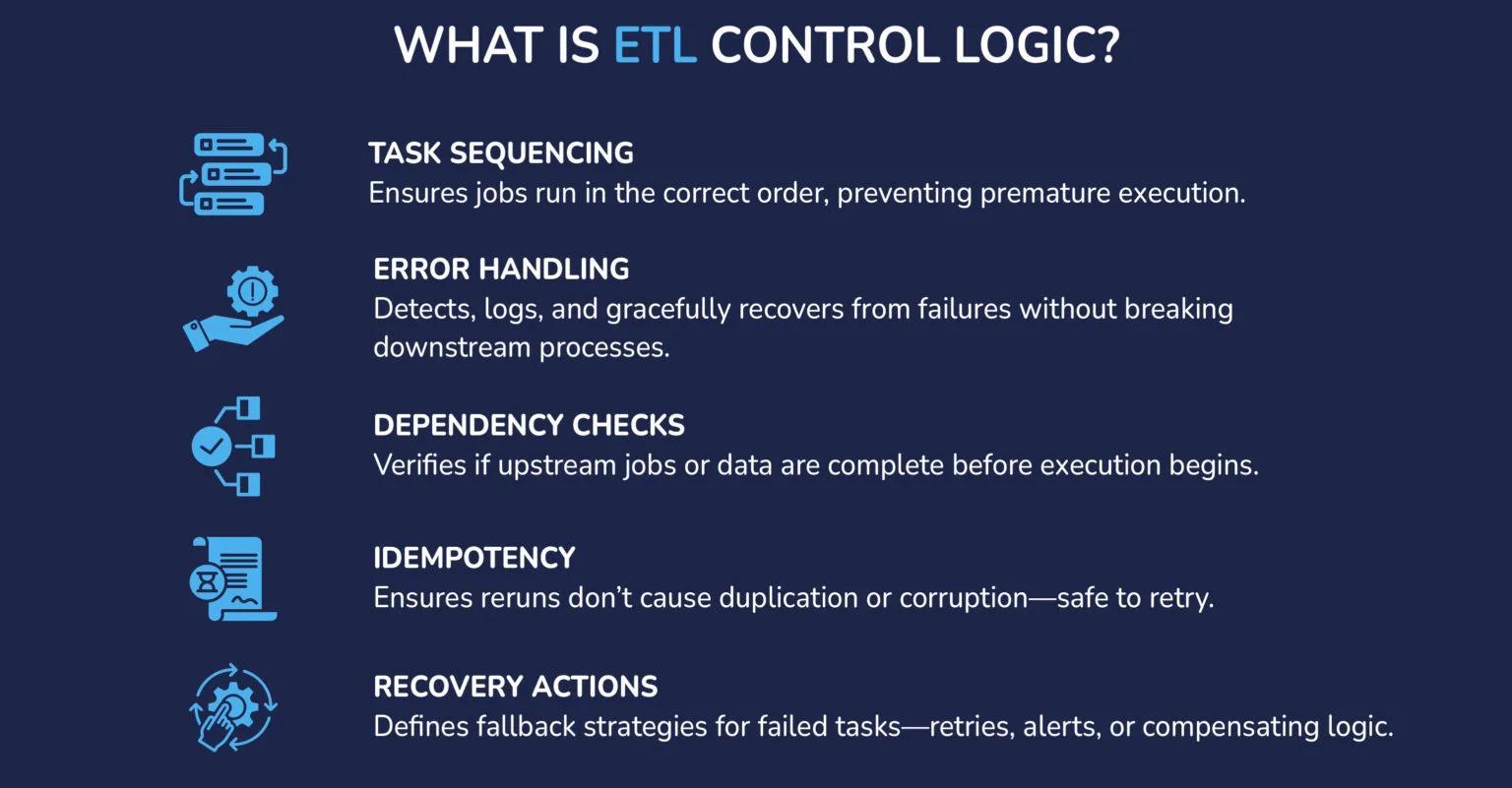

Reliable data pipelines need to be engineered, not just assembled, and intelligent orchestration is what holds them together. Before you start building data pipelines, you need to know what ETL is. It stands for Extract, Transform, and Load, a fundamental data integration process that pulls raw data from various sources, transforms it into a usable format, and loads it into a destination system like a data warehouse. Because each stage depends on the one before it, control logic is important: it runs tasks in the right order, catches problems early, and keeps your data clean.

You cannot afford to skip control logic. Think of the data pipeline you build as a high-speed train designed for speed and style: without signals, track control, and emergency systems, it ends in breakdowns, delays, and disasters. That’s exactly what happens in data engineering when ETL pipelines lack control logic. Data teams obsess over tools, cloud platforms, and transformations, but the real guardian of reliability, the thing that prevents midnight firefighting, is control logic.

Control logic lives in the orchestration layer of your ETL pipeline. It dictates task order, defines dependencies, and handles failures. Orchestration tools like Airflow or Prefect manage execution, but control logic is what ensures consistency, stability, and error resilience across the workflow.

A pipeline without logic is like a machine running on autopilot. It performs tasks, but can’t adapt, recover, or make decisions when things go off script.

Here’s how control logic shapes real ETL outcomes.

Tech Tip: Avoid relying solely on task naming or implicit ordering to control sequence. Instead, use explicit DAG definitions or task chaining.
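To make explicit ordering concrete, here is a minimal sketch in plain Python using the standard-library graphlib module (Python 3.9+), not an orchestrator API. The task names are hypothetical, loosely based on the e-commerce example discussed later. Dependencies are declared explicitly, so the run order is derived from them rather than from names or definition order, and a circular dependency raises graphlib.CycleError instead of producing an arbitrary sequence.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks for a daily e-commerce ETL run. Each key lists the
# tasks it depends on, so ordering is declared, never inferred.
dependencies = {
    "extract_inventory": set(),
    "extract_sales": set(),
    "transform_inventory": {"extract_inventory"},
    # Sales must not be processed before inventory, or revenue is misreported.
    "transform_sales": {"extract_sales", "transform_inventory"},
    "load_warehouse": {"transform_inventory", "transform_sales"},
}


def execution_order(deps):
    """Return one valid run order; raises graphlib.CycleError on a cycle."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)
```

In Airflow, the same intent is usually expressed by chaining tasks with the >> operator inside a DAG definition.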

What happens when a task fails? Do you retry, or just send an alert?

For tricky executions, control logic lets you configure how many times to retry, how long to wait between attempts, and when to fall back or raise an alert.

Tech Tip: Use try/except blocks within custom Python operators, or failure hooks in Prefect for structured fallbacks.
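A minimal sketch of a structured fallback with try/except, written as plain Python functions rather than an Airflow operator or Prefect hook. The two source functions are hypothetical stand-ins; the primary always fails here so the fallback path is exercised.

```python
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("etl")


def fetch_from_primary():
    # Hypothetical primary source that is currently unavailable.
    raise ConnectionError("primary API timed out")


def fetch_from_snapshot():
    # Hypothetical fallback: the last good snapshot, flagged as stale.
    return {"rows": [{"sku": "A1", "qty": 5}], "stale": True}


def extract_with_fallback(primary, fallback):
    """Try the primary source; on a known, recoverable error, use the fallback."""
    try:
        return primary()
    except (ConnectionError, TimeoutError) as exc:
        # Only errors we know how to recover from are caught; anything else
        # propagates so the orchestrator marks the task as failed.
        log.warning("Primary extract failed (%s); using fallback", exc)
        return fallback()


result = extract_with_fallback(fetch_from_primary, fetch_from_snapshot)
print(result["stale"])  # True
```

Catching only the error types you can recover from is the design choice that matters: an unexpected error, such as a schema problem, still fails the task loudly instead of being hidden behind the fallback.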

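Just because Task B can run doesn’t mean it should. Control logic defines hard vs. soft dependencies: with a hard dependency, Task B runs only if Task A succeeds; with a soft dependency, Task B runs once Task A finishes, whatever its outcome.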

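For example, you may want to run a load step only when every upstream task succeeds, or trigger a cleanup or alert task only when one of them fails.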

Tech Tip: To define conditional execution, use trigger_rule in Airflow (e.g., TriggerRule.ALL_SUCCESS, TriggerRule.ONE_FAILED).
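Airflow’s scheduler evaluates trigger rules itself; the sketch below is a simplified, hypothetical re-implementation in plain Python so it runs without Airflow installed. The rule strings match the values of TriggerRule.ALL_SUCCESS, TriggerRule.ONE_FAILED, and TriggerRule.ALL_DONE, and the downstream task names in the comments are hypothetical. ALL_SUCCESS models a hard dependency; ALL_DONE models a soft one.

```python
from enum import Enum


class State(Enum):
    RUNNING = "running"
    SUCCESS = "success"
    FAILED = "failed"


FINISHED = {State.SUCCESS, State.FAILED}


def should_run(rule, upstream):
    """Decide whether a downstream task may start, given upstream task states."""
    if rule == "all_success":
        # Hard dependency: every upstream task must have succeeded.
        return all(s is State.SUCCESS for s in upstream)
    if rule == "one_failed":
        # Failure path: fire as soon as any upstream task has failed.
        return any(s is State.FAILED for s in upstream)
    if rule == "all_done":
        # Soft dependency: wait until everything finishes, success or not.
        return all(s in FINISHED for s in upstream)
    raise ValueError(f"unknown trigger rule: {rule}")


upstream = [State.SUCCESS, State.FAILED]
print(should_run("all_success", upstream))  # False: skip load_warehouse
print(should_run("one_failed", upstream))   # True: run notify_on_call
print(should_run("all_done", upstream))     # True: run cleanup_temp_files
```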

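Control logic is the silent enforcer of order in your data stack. Without it, chaos creeps in fast. It also guards idempotency: running a task twice should leave the data in the same state as running it once. This is essential for pipelines with retry logic or manual re-runs.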

Tech Tip: Design with immutable raw layers and overwrite-safe transforms. Prefer merge operations, deduplication keys, or UPSERT strategies in SQL data warehouses.
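A minimal sketch of an UPSERT-based idempotent load, using Python’s built-in sqlite3 module as a stand-in for a warehouse (SQLite supports INSERT ... ON CONFLICT ... DO UPDATE from version 3.24). The sales table, its columns, and the order_id deduplication key are hypothetical. Loading the same batch twice, as a retry would, still leaves one row per order.

```python
import sqlite3


def load_daily_sales(conn, rows):
    """Idempotent load: re-running with the same rows leaves the table unchanged."""
    # order_id is the deduplication key; ON CONFLICT turns a duplicate
    # insert into an update instead of a second row.
    conn.executemany(
        """
        INSERT INTO sales (order_id, amount) VALUES (?, ?)
        ON CONFLICT(order_id) DO UPDATE SET amount = excluded.amount
        """,
        rows,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (order_id TEXT PRIMARY KEY, amount REAL)")

batch = [("o-1", 19.99), ("o-2", 5.00)]
load_daily_sales(conn, batch)
load_daily_sales(conn, batch)  # simulated retry or manual re-run

count, total = conn.execute("SELECT COUNT(*), SUM(amount) FROM sales").fetchone()
print(count, total)
```

Many data warehouses express the same pattern with a MERGE statement keyed on the deduplication column.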

What does your pipeline do when something unexpected happens?

If control logic is implemented correctly, your pipelines respond with intent instead of merely reacting.

This enables self-healing pipelines that don’t need constant on-call involvement.

Tech Tip: Combine automatic retries with alert thresholds and a maximum retry count to prevent infinite retry loops (e.g., alert on the 3rd failure).
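A minimal sketch of retries with an alert threshold and a hard cap, in plain Python. The function name, its parameters, and the flaky source are hypothetical; the defaults (alert on the 3rd failure, give up after the 5th) are illustrative, not recommendations.

```python
import time


def run_with_retries(task, max_attempts=5, alert_on=3, base_delay=1.0, alert=print):
    """Retry `task` with exponential backoff, alert at a threshold, stop at a cap."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as exc:  # broad for the sketch; narrow it in practice
            if attempt == alert_on:
                # Persistent failure: notify a human, but keep retrying.
                alert(f"ALERT: task failed {attempt} times: {exc}")
            if attempt == max_attempts:
                # Hard cap: re-raise so the run is marked failed
                # instead of retrying forever.
                raise
            # Wait 1x, 2x, 4x ... base_delay between attempts.
            time.sleep(base_delay * 2 ** (attempt - 1))


# Usage: a hypothetical source that fails three times, then recovers.
calls = {"n": 0}


def flaky_extract():
    calls["n"] += 1
    if calls["n"] <= 3:
        raise ConnectionError("source unavailable")
    return "extracted"


alerts = []
result = run_with_retries(flaky_extract, base_delay=0.01, alert=alerts.append)
print(result, calls["n"], len(alerts))  # extracted 4 1
```

Orchestrators provide the retry half of this natively (for example, the retries and retry_delay arguments on Airflow tasks), so in practice you would typically configure those and hook the alert into a callback such as on_retry_callback.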

Many ETL pipelines are modeled as DAGs (Directed Acyclic Graphs) in tools like Apache Airflow, Prefect, or Dagster. But it is the logic connecting those tasks, through conditions, dependencies, and failure protocols, that determines resilience.

Let’s illustrate with a global e-commerce platform that processes inventory and sales data every day:

- Sales data loads before inventory → misreported revenue

- A failed ETL run goes unnoticed → executives report the wrong numbers

When you neglect control logic, the cracks rarely show up during development; they surface in production.

Too often, engineers treat control logic as “plumbing.” The results can be costly:

- A bank’s ETL job silently fails overnight → loan decisions are made on stale credit scores

- A SaaS provider duplicates transactions during a retry → customers get double-billed → PR nightmare
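Silent failures like the bank example are often caught by checking data freshness rather than task status alone. A minimal sketch, assuming the timestamp of the last successful load can be read from warehouse metadata; the function name, the 24-hour limit, and the timestamps are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def check_freshness(last_loaded_at, max_age, now=None):
    """Raise if the newest load is older than max_age (a silent-failure guard)."""
    if now is None:
        now = datetime.now(timezone.utc)
    age = now - last_loaded_at
    if age > max_age:
        raise RuntimeError(f"data is stale: last load {age} ago (limit {max_age})")
    return age


now = datetime(2025, 1, 2, 6, 0, tzinfo=timezone.utc)
last_load = datetime(2025, 1, 1, 2, 0, tzinfo=timezone.utc)  # overnight job never ran

try:
    check_freshness(last_load, max_age=timedelta(hours=24), now=now)
except RuntimeError as err:
    # Block downstream consumers (e.g., the credit-scoring step) and alert.
    print(err)
```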

1. Use Robust Orchestration Tools: Apache Airflow, Dagster, Prefect, AWS Step Functions, dbt + Airflow.

2. Use DAGs, SLAs, and dynamic workflows to model real-world dependencies.

3. Implement Checkpoints & Transactions:

4. Plan for Failure:

5. Make It Idempotent:

6. Monitor Beyond “Success” or “Fail”:
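Items 3 and 5 work together. A minimal sketch, using sqlite3 as a stand-in for the target store, with hypothetical events and checkpoint tables: each batch is written in the same transaction as its checkpoint, so a failure leaves both or neither, and a re-run resumes after the last committed batch instead of reloading everything.

```python
import sqlite3


def load_batches(conn, batches):
    """Load batches in order, committing each batch and its checkpoint atomically."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS checkpoint "
        "(id INTEGER PRIMARY KEY CHECK (id = 1), last_batch INTEGER)"
    )
    conn.execute("INSERT OR IGNORE INTO checkpoint VALUES (1, -1)")
    conn.commit()
    (last_done,) = conn.execute("SELECT last_batch FROM checkpoint").fetchone()

    for batch_no, rows in enumerate(batches):
        if batch_no <= last_done:
            continue  # committed by an earlier run; skip on restart
        # One transaction: commits on success, rolls back rows AND checkpoint on error.
        with conn:
            conn.executemany("INSERT INTO events (id, payload) VALUES (?, ?)", rows)
            conn.execute("UPDATE checkpoint SET last_batch = ? WHERE id = 1", (batch_no,))


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT)")

# Batch 1 contains a duplicate key, simulating a mid-run failure.
bad_batches = [[(1, "a"), (2, "b")], [(3, "c"), (3, "dup")]]
try:
    load_batches(conn, bad_batches)
except sqlite3.IntegrityError:
    pass  # batch 1 rolled back; checkpoint still points at batch 0

fixed_batches = [[(1, "a"), (2, "b")], [(3, "c"), (4, "d")]]
load_batches(conn, fixed_batches)  # resumes at batch 1
print(conn.execute("SELECT COUNT(*) FROM events").fetchone()[0])  # 4
```

The second call succeeds only because the checkpoint makes it skip batch 0; reloading that batch would violate the primary key on ids 1 and 2.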

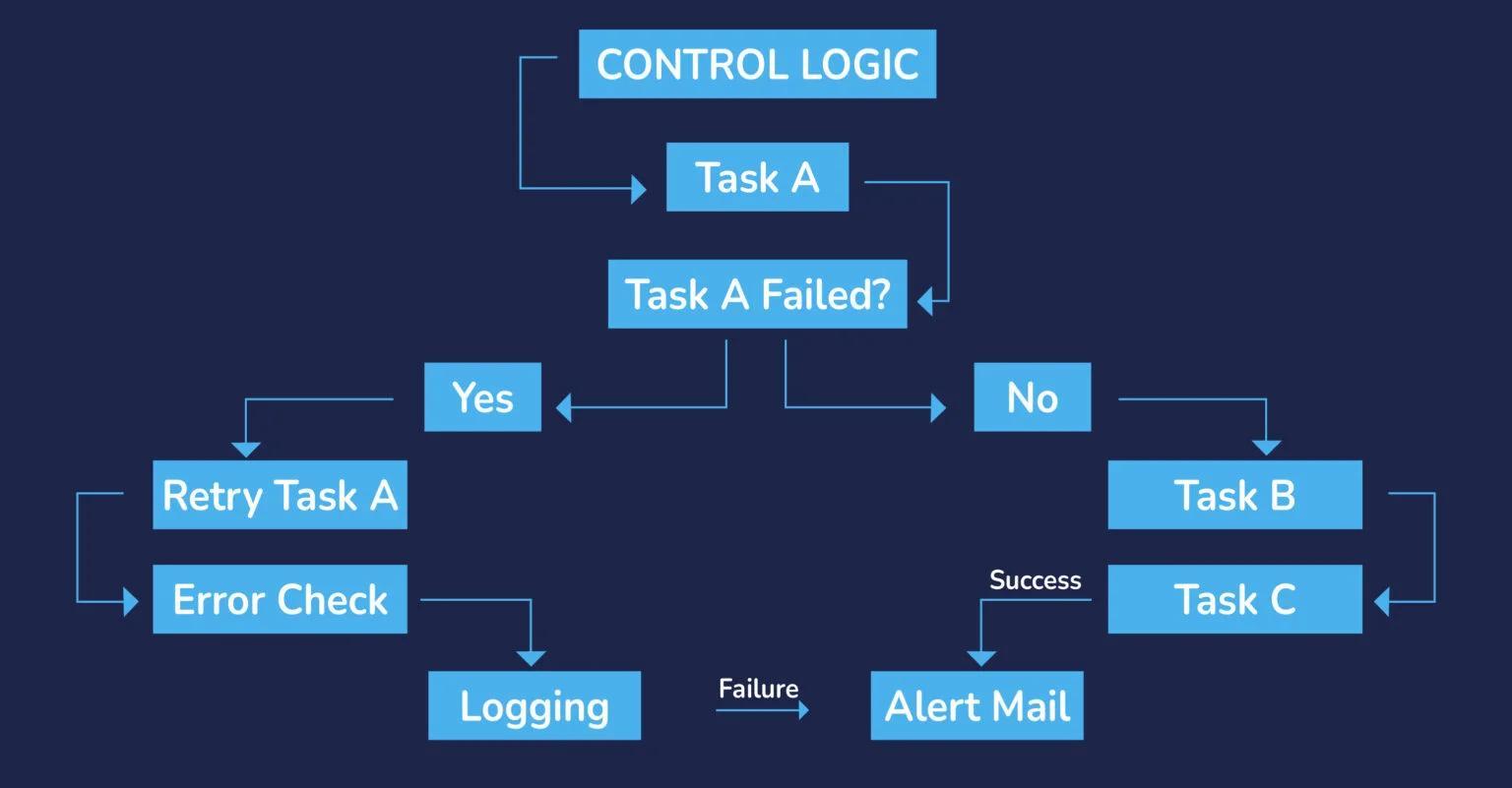

Here’s a simplified flowchart to illustrate a basic control logic pattern:

Here’s a quick control logic checklist you can apply today:

Control logic isn’t a back-office function; it’s the core of reliable data engineering. It’s the difference between an automated data platform and a chaotic mess of panicked calls, corrupted reports, and angry stakeholders.

If you’re designing pipelines that need to scale globally, serve real-time dashboards, or feed machine learning models, you can’t afford to treat control logic as an afterthought.

To learn more about our journey, read our in-depth blog on migrating legacy ETL pipelines to Apache Spark for scalable, reliable data processing.